Low-Cost Sensors Enable New Possibilities for Advanced Systems in Poultry Management and Processing

Advances in consumer technology have allowed researchers to explore a new range of possibilities for low-cost advanced sensor systems for poultry processing and management, according to Colin Usher of the Georgia Tech Research Institute’s Food Processing Technology Division.

Advanced automation and robotics systems for manufacturing have been expensive to develop, with final price tags ranging from $50,000 to over $500,000, due in large part to the high cost of their sensors. For poultry companies to justify implementing these types of systems in processing plants, costs need to be significantly lower.

Fortunately, recent developments in consumer technology have brought several low-cost sensing solutions to market. This shift has allowed researchers at the Georgia Tech Research Institute’s Food Processing Technology Division (FPTD) to explore how these devices can serve as the basis of affordable sensor systems for poultry processing and management.

One example of a newly available low-cost sensor is the Nintendo Wii video game controller, which uses accelerometers and gyroscopes derived from the advanced inertial navigation systems of military aircraft such as the USAF C-141. A $30 controller now contains the same types of sensors that previously cost thousands of dollars, albeit with some sacrifice in accuracy. More recently, these sensors have become available in single units for as little as $10.

Microsoft changed the landscape with the release of a 3D sensor called the Kinect, priced at $199. The device was originally developed for Microsoft’s Xbox 360 video game console but was almost immediately modified by hackers to work with a computer.

A community of software developers quickly formed around the Kinect, implementing applications ranging from simple 3D scanning to advanced sensing for robotics. Recognizing its potential, Microsoft then released a Kinect sensor for the PC, along with a software development kit and a commercial license allowing development of commercial applications outside its core video game market.

The original Kinect sensor uses a structured light approach to generate a 3D image. An infrared projector casts a light pattern onto objects in the sensor’s field of view. This pattern, much like a camouflage pattern, is captured by the sensor’s infrared camera and processed, and the deformations identified in the pattern allow the Kinect to compute depth and generate a 3D image.
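Structured light sensors recover depth by triangulation: the projector and the infrared camera are separated by a fixed baseline, so a pattern feature on a nearer surface appears shifted by a larger amount (its disparity) than one on a farther surface. The Python sketch below assumes an idealized, rectified projector and camera pair; the focal length, principal point and baseline values are illustrative assumptions, not the Kinect's calibrated parameters, and the Kinect's internal processing is not claimed to work exactly this way. It converts a disparity to depth with the standard relation Z = f·b/d, then back-projects a pixel into a 3D point with a pinhole camera model, which is how a depth image becomes a point cloud.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PinholeCamera:
    """Idealized pinhole intrinsics (illustrative values, not Kinect calibration)."""
    fx: float  # focal length in pixels, x direction
    fy: float  # focal length in pixels, y direction
    cx: float  # principal point, x (pixels)
    cy: float  # principal point, y (pixels)


def depth_from_disparity(f_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulate depth in metres from the pixel shift of a pattern feature.

    Assumes a rectified projector/camera pair, so Z = f * b / d.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return f_px * baseline_m / disparity_px


def back_project(cam: PinholeCamera, u: float, v: float,
                 depth_m: float) -> Tuple[float, float, float]:
    """Convert pixel (u, v) with known depth into a 3D point in the camera frame."""
    x = (u - cam.cx) * depth_m / cam.fx
    y = (v - cam.cy) * depth_m / cam.fy
    return (x, y, depth_m)


if __name__ == "__main__":
    # Hypothetical parameters, chosen only for illustration.
    cam = PinholeCamera(fx=580.0, fy=580.0, cx=320.0, cy=240.0)
    baseline = 0.075  # metres between projector and IR camera (assumed)
    z = depth_from_disparity(cam.fx, baseline, disparity_px=43.5)
    print(z, back_project(cam, 378.0, 240.0, z))
```

Applying `back_project` to every valid pixel of a depth image yields a point cloud of the kind used later in the poultry projects.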

Earlier this year, Microsoft released the second generation of its Kinect sensor, dubbed Kinect V2. The Kinect V2 is a next-generation time-of-flight (TOF) camera that offers a resolution of 512×424 pixels at a price of $199. By comparison, the FPTD research team purchased a TOF camera with a resolution of 320×240 pixels in 2010 for a whopping $8,000.
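The price comparison can be checked with simple arithmetic. The short Python sketch below uses only the prices and resolutions quoted in this article; the helper names are illustrative. It also normalises by resolution, since the newer sensor delivers more pixels for less money: the purchase price falls by about 97.5 per cent, and the cost per depth pixel falls by more than a factor of 100.

```python
def percent_reduction(old_price: float, new_price: float) -> float:
    """Percentage by which new_price undercuts old_price."""
    return 100.0 * (old_price - new_price) / old_price


def cost_per_pixel(price: float, width: int, height: int) -> float:
    """Dollars paid per depth pixel of sensor resolution."""
    return price / (width * height)


if __name__ == "__main__":
    # Prices and resolutions as quoted in the article.
    tof_2010 = {"price": 8000.0, "width": 320, "height": 240}
    kinect_v2 = {"price": 199.0, "width": 512, "height": 424}

    cut = percent_reduction(tof_2010["price"], kinect_v2["price"])
    print(f"Cost reduction: {cut:.1f}%")
    for name, cam in (("2010 TOF camera", tof_2010), ("Kinect V2", kinect_v2)):
        per_px = cost_per_pixel(cam["price"], cam["width"], cam["height"])
        print(f"{name}: ${per_px:.5f} per pixel")
```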

The Kinect V2 therefore represents a cost reduction of more than 97 per cent over the 2010 camera, while offering higher resolution.

Generally, a TOF camera works by modulating a light source and measuring the time it takes for that light to reflect off an object and return to the sensor. Distance is then resolved from the known speed of light: light returns to the sensor later from objects that are farther away than from objects that are closer. TOF imaging has an advantage over the structured light approach in its ability to operate effectively under non-uniform or ambient lighting.
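Because the light travels to the object and back, the measured delay covers twice the distance, so d = c·Δt/2. Continuous-wave TOF cameras typically do not time individual pulses; instead they modulate the light at a frequency f and measure the phase shift φ of the returning signal, which gives d = c·φ/(4πf) and is unambiguous only up to c/(2f). The Python sketch below illustrates both relations; the 16 MHz modulation frequency is an assumed example value, not a Kinect V2 specification.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact, by SI definition)


def distance_from_round_trip(delay_s: float) -> float:
    """Distance in metres from a measured round-trip delay; light covers 2d."""
    return SPEED_OF_LIGHT * delay_s / 2.0


def distance_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance in metres for a continuous-wave TOF camera from the phase shift.

    The phase is wrapped to [0, 2*pi), so the result is only unambiguous up to
    c / (2 * f); farther objects alias back into this range.
    """
    wrapped = phase_rad % (2.0 * math.pi)
    return SPEED_OF_LIGHT * wrapped / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Maximum distance in metres measurable before the phase wraps around."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    # Light returning after 10 nanoseconds came from an object roughly 1.5 m away.
    print(distance_from_round_trip(10e-9))
    # Assumed 16 MHz modulation: a phase shift of pi is half the unambiguous range.
    f = 16e6
    print(unambiguous_range(f), distance_from_phase(math.pi, f))
```

Measuring phase rather than raw time avoids having to resolve nanosecond-scale delays directly, which is one reason modulation is used.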

FPTD researchers thoroughly tested both Kinect sensors and the commercial TOF camera to establish the accuracy and potential drawbacks of each, with an eye toward applications in poultry processing. The research team found that the low-cost Kinect sensors are indeed a viable alternative, with accuracy and operation on par with the much higher-cost sensor.
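The test procedure is not described here. One common way to quantify depth accuracy, offered purely as an assumed illustration, is to place a flat target at known reference distances, record each sensor's readings and report the mean error (bias) and root-mean-square error (RMSE). The Python function below computes those two statistics; the function name is hypothetical, and the readings in the usage example are made-up placeholders, not FPTD measurements.

```python
import math
from typing import Sequence, Tuple


def depth_error_stats(reference_m: Sequence[float],
                      measured_m: Sequence[float]) -> Tuple[float, float]:
    """Return (bias, rmse) in metres for paired reference and measured depths."""
    if len(reference_m) != len(measured_m) or not reference_m:
        raise ValueError("need equal-length, non-empty sequences")
    errors = [m - r for r, m in zip(reference_m, measured_m)]
    bias = sum(errors) / len(errors)  # systematic offset
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))  # overall spread
    return bias, rmse


if __name__ == "__main__":
    # Placeholder numbers for illustration only; not real sensor data.
    reference = [0.50, 1.00, 1.50, 2.00]
    readings = [0.51, 0.99, 1.52, 1.98]
    bias, rmse = depth_error_stats(reference, readings)
    print(f"bias = {bias * 1000:.1f} mm, RMSE = {rmse * 1000:.1f} mm")
```

Running the same comparison for each sensor at the same target positions puts the low-cost and high-cost devices on a common footing.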

Ongoing Research Using Kinect Sensors

Currently, the Kinect sensors are being used extensively in two projects: Advanced Sensing and Grasping, and Growout House Robotics. The Advanced Sensing and Grasping project involves developing algorithms that automatically sense poultry parts such as wings, breasts, legs and thighs, and detect locations where robotic manipulators can grasp or operate on the parts.

The parts may be either singulated on a belt or batched together in bins. 3D information from the Kinect assists both in detecting the parts and in determining approach vectors for the robotic end effectors.
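How the approach vectors are computed is not specified. As a hedged illustration of one simple technique, the Python sketch below fits a local plane z = ax + by + c by least squares to the 3D points around a candidate grasp location, takes the plane normal, orients it toward the camera (assumed to sit at the origin looking along +z), and uses the opposite direction as the approach vector for the end effector. The function names are hypothetical. This plane form assumes the patch is not viewed edge-on; PCA-based normal estimation is a common, more general alternative.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points: Sequence[Point]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c via the normal equations."""
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    sz = sum(p[2] for p in points)
    m: List[List[float]] = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [sxz, syz, sz]
    det = _det3(m)
    if abs(det) < 1e-12:
        raise ValueError("points are degenerate (collinear or too few)")
    solution = []
    for col in range(3):
        # Cramer's rule: replace one column with the right-hand side.
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        solution.append(_det3(mc) / det)
    return solution[0], solution[1], solution[2]


def approach_vector(points: Sequence[Point]) -> Point:
    """Unit vector along which a gripper would travel to reach the local surface."""
    a, b, _ = fit_plane(points)
    nx, ny, nz = -a, -b, 1.0  # normal of the plane z - a*x - b*y - c = 0
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    nx, ny, nz = nx / length, ny / length, nz / length
    # Orient the normal toward the camera (from the patch centroid to the origin).
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    cz = sum(p[2] for p in points) / len(points)
    if nx * -cx + ny * -cy + nz * -cz < 0:
        nx, ny, nz = -nx, -ny, -nz
    # The end effector moves against the camera-facing normal, into the surface.
    return (-nx, -ny, -nz)


if __name__ == "__main__":
    # Hypothetical patch on a surface tilted along x, about 1 m from the camera.
    patch = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.05), (0.0, 0.1, 1.0), (0.1, 0.1, 1.05)]
    print(approach_vector(patch))
```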

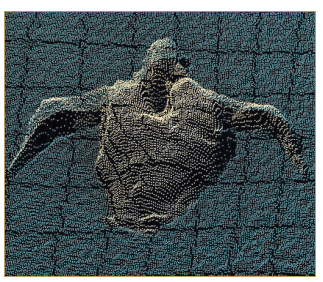

Researchers have collected sample data from both singulated and batched birds. A sample 3D point cloud of a single bird is shown above.

For the Growout House Robotics project, the Kinect sensor is mounted on top of a mobile ground-based robot. The robot was recently operated in an experimental growout house, where 3D data was collected over an entire growout cycle. Researchers plan to use the data for automation tasks, such as allowing the robot to navigate autonomously through a house among the chickens. They are also exploring methods of characterising the growth of the chickens from the 3D data.
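How growth will be characterised is still being explored. Purely as a hypothetical example of the kind of measurement 3D data makes possible, the Python sketch below takes the points belonging to one bird (segmentation is assumed to have been done already, and the points are assumed to be expressed in a floor-aligned frame with z pointing up) and reports the bird's height above the floor and an approximate top-view footprint from the number of occupied grid cells. The function name and the 1 cm cell size are assumptions for illustration.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def bird_height_and_footprint(points: Sequence[Point],
                              cell_m: float = 0.01) -> Tuple[float, float]:
    """Return (height_m, footprint_m2) for the points of one segmented bird.

    Assumes the points are already in a floor frame: z is the height above the
    litter and (x, y) lie in the floor plane.
    """
    if not points:
        raise ValueError("no points")
    height = max(z for _, _, z in points)
    # Count distinct floor-grid cells that contain at least one point.
    cells = {(math.floor(x / cell_m), math.floor(y / cell_m)) for x, y, _ in points}
    footprint = len(cells) * cell_m * cell_m
    return height, footprint


if __name__ == "__main__":
    # Placeholder points for illustration only; not recorded data.
    bird = [(0.005, 0.005, 0.10), (0.015, 0.005, 0.20), (0.006, 0.004, 0.05)]
    print(bird_height_and_footprint(bird))
```

Repeating such measurements at intervals over a growout cycle would give a simple trend that could be compared with weighed samples.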

A sample 3D image taken from this testing is shown above.

Sensors that were previously very expensive components of automation and robotic systems are now much more economical. Researchers hope this will open new opportunities for more advanced systems development along the entire poultry production and processing chain.

January 2015